On 30.04.2020 pc-kombo saw a downtime of 1h15m. Both the PC hardware recommender, including the benchmark, and this blog were down. Being completely down would be critical for many sites and services. For this site it was not that bad, because the number of affected visitors was probably small. Still, the downtime happened during the first period in which pc-kombo ran paid advertising, so the timing was not ideal. But I wanted the crisis to be useful, so I wrote a postmortem: How to react to such a downtime, what worked, what did not, and which lessons can be learned? I hope that sharing that experience will be useful to some of you.

It has three sections: first some background information, then an uncommented log of how the downtime played out, written during the events, and finally some words about the lessons learned.

Context: What started the downtime

The downtime was triggered by my own action. Some time ago, Scaleway sent out this mail:

C2 & ARM64 Instances end-of-life — Migrate now to Virtual Instances & Bare Metal cloud servers

Dear customer,

As of December 1st, 2020, our C2 and ARM64 Instances will reach their end-of-life. The physical servers hosting them are indeed randomly affected by several stability issues, which prevent us from fully guaranteeing the overall quality of service. Rest assured, however, that we are committed to guiding you in your migration and provide you with the best matching resources for improved stability, performance and reliability.

Starting from April 14th, 2020, it is no longer possible to create new C2 and ARM64 Instances. In addition, their support will end on July 1st, 2020. As a result, our technical assistance will no longer address issues related to those instances. A price increase is also to be expected on July 1st, 2020. Lastly, C2 and ARM64 Instances will be completely deprovisioned as of December 1st, 2020.

pc-kombo ran on a C2 instance, so it had to move, and the sooner the better. I powered down the pc-kombo C2 instance to make a snapshot. The plan was to power it back on, create a new instance of a different type, and recreate the site there from the snapshot while the old C2 instance kept serving visitors in parallel. That did not work: the C2 instance never powered back on.

Event log

15:10: Powering down the C2 instance to make a backup

15:15: C2 instance does not restart. Trying again.

15:25: Create a new vanilla DEV-M instance. Starts up fine.

15:25: Create a new DEV-L instance with the backup set as image. Does not come online.

15:35: Begin manual restore of application files from dev setup to the DEV-M instance.

15:40: Begin downloading external Borg backup (which also includes application files and additional operational data) on DEV-M

15:46: Destroy DEV-L instance, not starting.

15:52: Opened support ticket to restart original C2 instance (priority: High)

15:56: SSH connection to DEV-M instance gets closed while the borg backup restore is running in that SSH session.

15:58: Reconnect to DEV-M, restart borg backup restore, in a screen session this time.

15:58: Support wrote:

I’ve performed some modifications on your DEV instance, and it may be online after the reboot that I’ve performed.

From my side, your DEV instance is back, and running

16:02: Respond to support ticket, asking to restart the C2 instance instead.

16:08: Borg backup restoration aborts, some important files are missing. Check that they are listed in the backup (borg list). Restart backup restore.

16:16: Support responds:

Due some events, we’re currently out of stock on this instance family. If you’ve just rebooted your instance, your physical hardware has been given to another customer which started his instance at same time.

Currently our engineering team hasn’t planned to restock it, because C2x instances are a deprecated product. They do not benefit from the latest functionalities (Hot snapshot, security group with Stateless rules) nor from the compatibility with certain products of the Scaleway ecosystem.

We strongly recommend you to migrate your current C2x instance, to a DEV or GP instance, to keep a stable and reliable production environment. Our DEV and GP are powered by AMD EPYC brand new CPU, to give you more and more power.

If you need help to migrate your C1/C2x instance, let me know, the migration can be done in few clicks!

https://www.scaleway.com/en/docs/migrate-c2-arm64-to-virtual-instance-using-rsync/

16:17: Realize that the documentation support linked to includes a step I had missed when creating the DEV-L instance from the backup: setting a bootscript.

16:25: Newly created DEV-L instance with bootscript set does not come online.

16:25: Ask support for help starting the DEV-L instance

16:30: DEV-L instance comes online. Downtime finished.

Lessons learned

After DEV-L, and thus pc-kombo, came back online, I played through the scenario of having to restore from my backups on a separate instance. Some of the lessons come from that exercise.

First, having an online backup was invaluable in this situation. It gave me confidence that no matter what happened, data loss would be minimal. I’m using the hidden borg/attic offer from rsync.net and pay less than $20 a year, which is definitely worth it.

However, that backup was incomplete. It did not include /etc/letsencrypt and some other recently populated data locations, like the password-protected goaccess reports in /var/www/html. Also, one sqlite database was corrupt: copying it into the backup while it is in use cannot work, because writes during the transfer leave the copy in an inconsistent state. It was restorable (sqlite3 broken.db ".recover" | sqlite3 new.db), but that takes additional time and might not always be possible. The backup needs to be improved.
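The manual borg list check from the event log can be automated, so that missing directories show up right after a backup run instead of during a restore. Below is a minimal sketch of a hypothetical Python helper, not part of the actual pc-kombo setup. It assumes borg 1.x, where `borg list --format '{path}{NL}' REPO::ARCHIVE` prints one archived path per line, stored without the leading slash. The REQUIRED list only contains the two locations named above.

```python
import subprocess

# Hypothetical list of locations the restore depends on (the two named above).
REQUIRED = ["/etc/letsencrypt", "/var/www/html"]


def missing_paths(listing: str, required: list[str]) -> list[str]:
    """Return the entries of `required` that do not appear in an archive listing.

    `listing` has one path per line, relative like borg stores them
    (e.g. "etc/letsencrypt/live/example.org/fullchain.pem").
    """
    archived = {line.strip().rstrip("/") for line in listing.splitlines() if line.strip()}
    missing = []
    for path in required:
        rel = path.strip("/")
        # A required path counts as present if it, or anything below it, is archived.
        if not any(p == rel or p.startswith(rel + "/") for p in archived):
            missing.append(path)
    return missing


def check_archive(archive: str, required: list[str] = REQUIRED) -> list[str]:
    """Run `borg list` on REPO::ARCHIVE and report required paths that are missing."""
    out = subprocess.run(
        ["borg", "list", "--format", "{path}{NL}", archive],
        check=True,
        capture_output=True,
        text=True,
    ).stdout
    return missing_paths(out, required)
```

Calling `check_archive("REPO::ARCHIVE")` after every `borg create` and alerting on a non-empty result would flag an incomplete backup long before it is needed. The prefix check deliberately requires a `/` after the directory name, so `etc/letsencrypt-old` does not count as `/etc/letsencrypt`.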

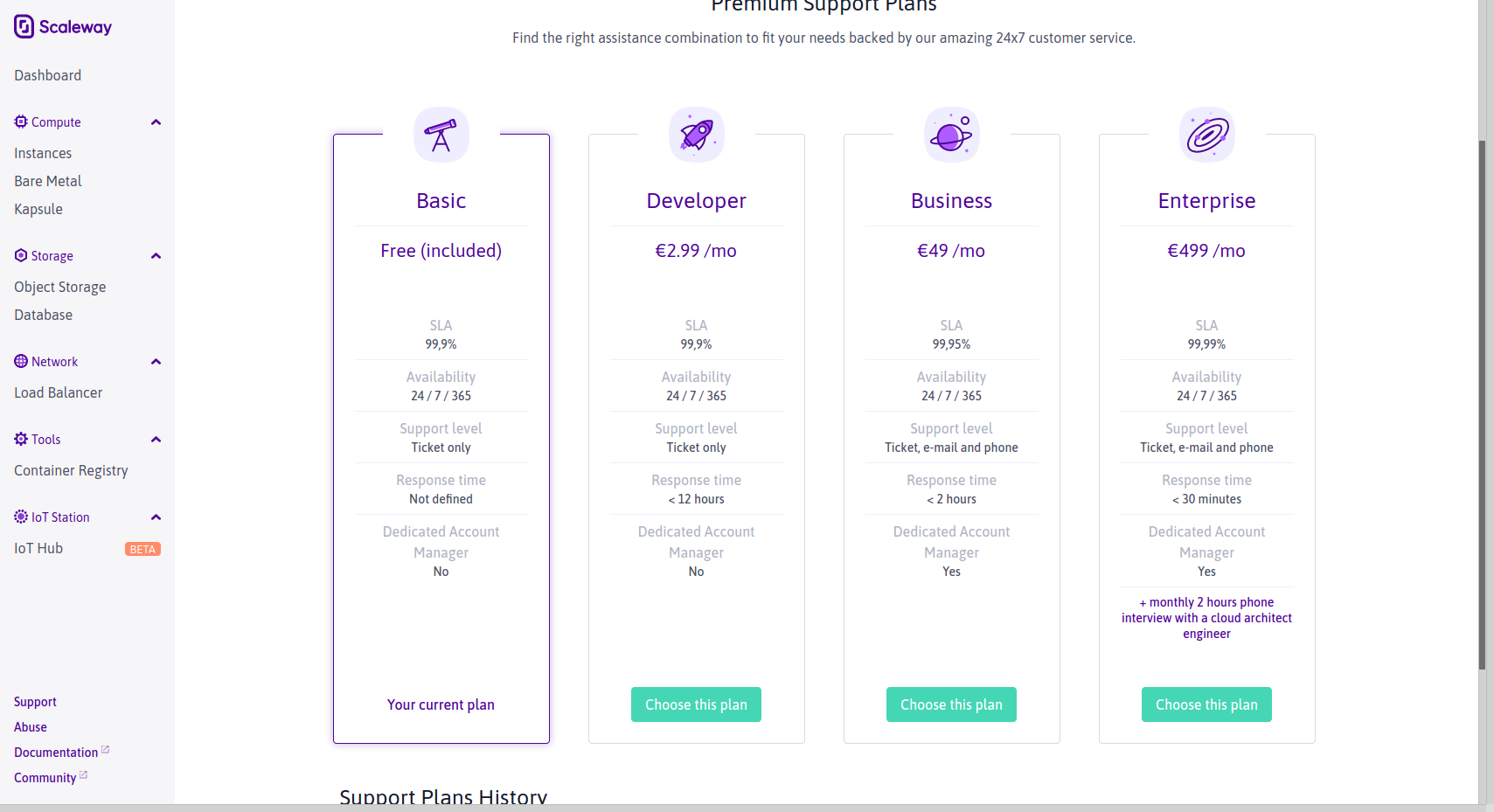
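For the corrupt database, one option is to stop copying the live SQLite file and instead back up a snapshot taken with SQLite’s online backup API, which produces a consistent copy even while the application keeps writing. The following is a minimal sketch using Python’s standard `sqlite3` module (`Connection.backup`, available since Python 3.7). The `snapshot_sqlite` helper and any paths are hypothetical. For the Ruby app itself, the sqlite3-ruby gem exposes the same API through its `SQLite3::Backup` class.

```python
import sqlite3
from pathlib import Path


def snapshot_sqlite(live_path: str, snapshot_path: str) -> None:
    """Copy a live SQLite database to snapshot_path via the online backup API.

    The backup API copies a consistent state of the database, unlike a plain
    file copy that can pick up half-written pages.
    """
    Path(snapshot_path).parent.mkdir(parents=True, exist_ok=True)
    src = sqlite3.connect(live_path)
    dst = sqlite3.connect(snapshot_path)
    try:
        with dst:
            src.backup(dst)
        # Verify the copy before handing it to the backup tool.
        (result,) = dst.execute("PRAGMA integrity_check").fetchone()
        if result != "ok":
            raise RuntimeError(f"snapshot failed integrity check: {result}")
    finally:
        dst.close()
        src.close()
```

Run it right before `borg create` (for example from the same cron job) and include the snapshot directory in the archive instead of the live database file.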

Second, I should have opened the support ticket earlier. Despite the small misunderstanding about which server to restart, support was helpful and fast. Keep in mind that I did not pay for a support plan, so Scaleway was under no obligation to react that fast to my ticket. Its reaction was excellent.

Scaleway’s floating IP feature was super helpful for quickly moving the site from the broken C2 instance to the new DEV-L instance. Having to set a new IP at the DNS level would have been way more complicated.

However, Scaleway’s policy also caused this situation. Giving the C2 instance away during the few minutes it was powered down, while having no reserve, is frankly not acceptable, especially since powering it down seems necessary to create the snapshot that the official migration manual relies on. As far as I remember, the message shown when trying to create a snapshot of the running instance did not suggest standby mode, but a full shutdown. In any case, Scaleway should not have allowed a situation where the old C2 instance could not be rebooted.

Third, restoring the files from the dev setup was a bad idea. Uploading them via sshfs took too much time. It’s better to focus completely on the faster borg backup.

Fourth, the borg backup, even if complete, is not enough. The restore process needs to take into account that the new server might run a different Linux distribution, and it has to be as fast as possible. The web app behind pc-kombo is a Ruby/Sinatra application (this situation adds to the other negatives I identified about that approach, which, to be clear, I otherwise love to work with). That means setting it up involves a fair amount of system configuration: system dependencies need to be installed, it has to be decided whether to use rvm or not, gems need to be reinstalled, and so on. Doing all that work under pressure is painful. It would be so much easier if the application could be distributed as a single binary. Sadly, all projects I know of that aimed to create such binaries from Ruby projects, like ruby-packer and traveling-ruby, are abandoned and incomplete. To work for pc-kombo and most Ruby projects, gems with native extensions like sqlite3-ruby need to be supported. I’m currently betting on AppImage being the best solution for that (starting point so far: a build script that provides Ruby) and plan to invest more work there.
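One small building block of a distribution-aware restore script is detecting the target system before installing system dependencies. The sketch below parses the standard /etc/os-release file and picks an install command for the SQLite headers that the native sqlite3 gem needs to compile. It is written in Python for consistency with the other examples. The helper names and the INSTALL_CMDS mapping are illustrative assumptions, and a real script would cover all system dependencies, not just one package.

```python
import shlex

# Hypothetical mapping: how to install the SQLite development headers that the
# native sqlite3 gem compiles against, per distribution family.
INSTALL_CMDS = {
    "debian": ["apt-get", "install", "-y", "libsqlite3-dev"],
    "fedora": ["dnf", "install", "-y", "sqlite-devel"],
    "arch": ["pacman", "-S", "--noconfirm", "sqlite"],
}


def parse_os_release(text: str) -> dict[str, str]:
    """Parse os-release content: KEY=value lines, values optionally quoted."""
    info = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # shlex undoes the shell-style quoting that os-release allows.
        parts = shlex.split(value)
        info[key] = parts[0] if parts else ""
    return info


def install_command(os_release: dict[str, str]) -> list[str]:
    """Pick a command from ID, falling back to ID_LIKE (e.g. ubuntu -> debian)."""
    candidates = [os_release.get("ID", "")] + os_release.get("ID_LIKE", "").split()
    for distro in candidates:
        if distro in INSTALL_CMDS:
            return list(INSTALL_CMDS[distro])
    raise RuntimeError(f"unsupported distribution: {candidates}")
```

Usage would look like `install_command(parse_os_release(open("/etc/os-release").read()))`. The ID_LIKE fallback is what lets derivatives such as Ubuntu or Rocky Linux reuse the Debian or Fedora entry. On Python 3.10+, `platform.freedesktop_os_release()` can replace the hand-written parser.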

Those are all the lessons I could draw from the downtime. I should add that it was really stressful. Thankfully it was not the first time I experienced something like this, so I knew how to stay calm, but when it is your own project and your own money on the line, the stress level is automatically a lot higher than usual.

Very interesting read! Thanks for sharing. I recently wrote a bit on backups vs restore and you may get some ideas from the Docker-based solution I wrote: https://wasteofserver.com/restore-backups-ugly-duckling/

Keep sharing. Thanks!


That's interesting, thank you :)